How to Build, Train and Deploy Your Own Recommender System – Part 2

We build a recommender system from the ground up with matrix factorization for implicit feedback systems. We then deploy the model to production in AWS.

Github link to the full Capstone Project

This is a recap of my Capstone Project, where I describe at a high level the process I went through to complete the course and build a machine learning model that can predict the winner of a 2021 Formula 1 race.

Before the start of this bootcamp, I had only beginner-level Python, even though I had been developing software for many years. I had attempted an end-to-end Data Science project once before, but projects like this tend to fail when you take them on alone, at least for me.

I cannot emphasize enough that courses like this GA Data Science bootcamp give you the best chance of achieving your goal. For me, that goal was to complete an end-to-end project. I’m pretty proud of myself, but I surely wouldn’t have done it without the course, its very capable and supportive instructors, and an equally curious cohort.

Ever since the first season of Drive to Survive, I’ve been captivated by the drama and excitement that is Formula 1. I’ve been consuming a public Formula 1 results API in some of my past blog posts, and I thought it would be fun to continue this trend and explore the insights and predictions that can be gleaned from past race data.

Even though this post only skims the surface and is really meant as a high-level summary, it still contains a lot of detail that many of you will not find interesting. If you are in this boat, please skip ahead to the conclusion.

Ergast Motor Racing publishes Formula 1 results from 1950 up to the present. The majority of my data set comes from this API.
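As a rough sketch of what working with this data can look like, the snippet below flattens one results payload into one row per driver per race. It assumes the nested JSON layout I understand the results endpoint to use (MRData, RaceTable, Races, Results). The sample payload, the driver and constructor ids, and the `flatten_results` helper are all made up for illustration and are not taken from my project.

```python
from typing import Any


def flatten_results(payload: dict[str, Any]) -> list[dict[str, Any]]:
    """Turn one Ergast-style results payload into one flat row per driver per race."""
    rows = []
    for race in payload["MRData"]["RaceTable"]["Races"]:
        for result in race["Results"]:
            rows.append(
                {
                    "season": int(race["season"]),
                    "round": int(race["round"]),
                    "race_name": race["raceName"],
                    "driver_id": result["Driver"]["driverId"],
                    "constructor_id": result["Constructor"]["constructorId"],
                    # The API returns numbers as strings, so convert them here.
                    "grid": int(result["grid"]),
                    "position": int(result["position"]),
                }
            )
    return rows


# Hand-written sample in the assumed shape; NOT real API output.
sample_payload = {
    "MRData": {
        "RaceTable": {
            "Races": [
                {
                    "season": "2021",
                    "round": "1",
                    "raceName": "Example Grand Prix",
                    "Results": [
                        {
                            "position": "1",
                            "grid": "2",
                            "Driver": {"driverId": "driver_a"},
                            "Constructor": {"constructorId": "team_x"},
                        },
                        {
                            "position": "2",
                            "grid": "1",
                            "Driver": {"driverId": "driver_b"},
                            "Constructor": {"constructorId": "team_y"},
                        },
                    ],
                }
            ]
        }
    }
}

rows = flatten_results(sample_payload)
for row in rows:
    print(row)
```

A flat list of rows like this loads straight into a pandas DataFrame, which is a convenient starting point for the feature work described later.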

I will also be scraping some data from the following sites:

I’ve been a developer for some time now, and for a while I’ve been fascinated by machine learning. In software development, I can make computers do what I want by programming them. However, in machine learning, you do not and cannot do that.

In machine learning, you need to prepare your data so that when you show it to your algorithms, they can see the patterns and come to a conclusion without being explicitly programmed to do so.

This is the part that perplexed me from the start; it felt like smoke and mirrors. However, having successfully gone through the process, it all makes sense to me now, and working on another end-to-end project by myself is no longer inconceivable.

Although the data we extracted from Ergast is great, it needs to be improved upon if we want to successfully predict the race winner. Apart from the weather information that I scraped from an external source (Wikipedia), we already had all the data we needed. We just had to create “features” from the data already there. In Data Science, we call this Feature Engineering. If you want to see how I did this, please check it out here. At the end of this process, I had about 110 features.
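To give a feel for what creating features from existing data means, here is a minimal pandas sketch. The column names (`driver_id`, `round`, `grid`, `position`) and the three derived features are assumptions chosen for illustration; they are not the 110 features from my project.

```python
import pandas as pd

# Tiny made-up results table: one row per driver per race.
results = pd.DataFrame(
    {
        "driver_id": ["a", "a", "a", "b", "b", "b"],
        "round": [1, 2, 3, 1, 2, 3],
        "grid": [1, 4, 2, 3, 1, 5],
        "position": [1, 3, 2, 2, 1, 4],
    }
)

# Order rows so each driver's races are in chronological order.
results = results.sort_values(["driver_id", "round"])

# Feature 1: the driver's average finishing position in PREVIOUS races.
# shift(1) keeps the current race's result out of its own feature.
results["avg_prev_position"] = results.groupby("driver_id")["position"].transform(
    lambda s: s.shift(1).expanding().mean()
)

# Feature 2: did the driver start on the front row?
results["front_row_start"] = (results["grid"] <= 2).astype(int)

# Target for a win / no-win classifier.
results["won"] = (results["position"] == 1).astype(int)

print(results)
```

The shift is the important design choice: a feature built from a race's own result would leak the answer into the model.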

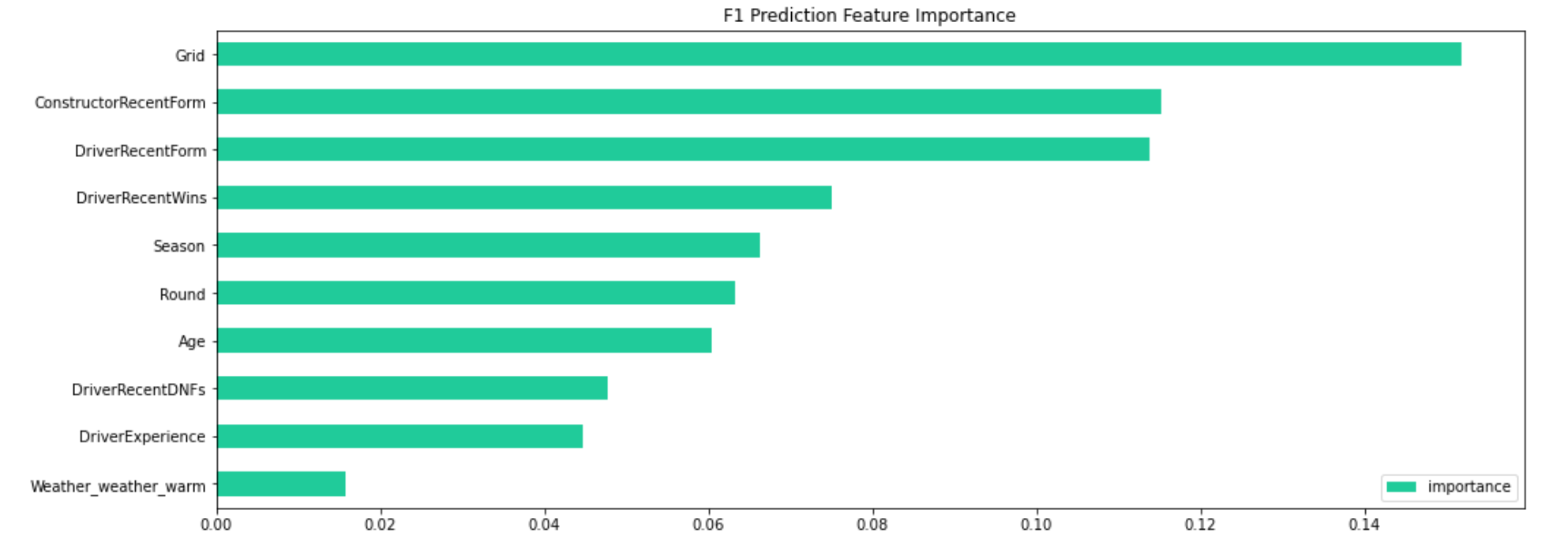

The next step is to determine the importance of these features, since we don’t want to use all of them in the modelling stage. During this Feature Importance stage, we identify the most important and relevant features and drop the rest. All of this is done in Python; here’s what I did to quantify the feature importance of the data.

Once we have identified the relevant features, we can safely drop the inconsequential ones (which just contribute to model bloat) and keep the most significant. At this stage we are ready to build our models.
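One common way to rank features, shown here only as a sketch and not necessarily the method I used, is to fit a tree ensemble and read its `feature_importances_`. The data below is synthetic and the feature names are placeholders.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data: 10 features, only 3 of which carry signal.
X, y = make_classification(
    n_samples=500,
    n_features=10,
    n_informative=3,
    n_redundant=0,
    shuffle=False,
    random_state=0,
)
feature_names = [f"feature_{i}" for i in range(X.shape[1])]

# Fit a random forest and read the impurity-based importances.
forest = RandomForestClassifier(n_estimators=200, random_state=0)
forest.fit(X, y)
importances = forest.feature_importances_

# Rank features from most to least important and keep the top 3.
ranked = sorted(zip(feature_names, importances), key=lambda pair: pair[1], reverse=True)
top_features = [name for name, _ in ranked[:3]]

for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Impurity-based importances can favor features with many distinct values, so it is worth cross-checking the ranking with another method before dropping anything.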

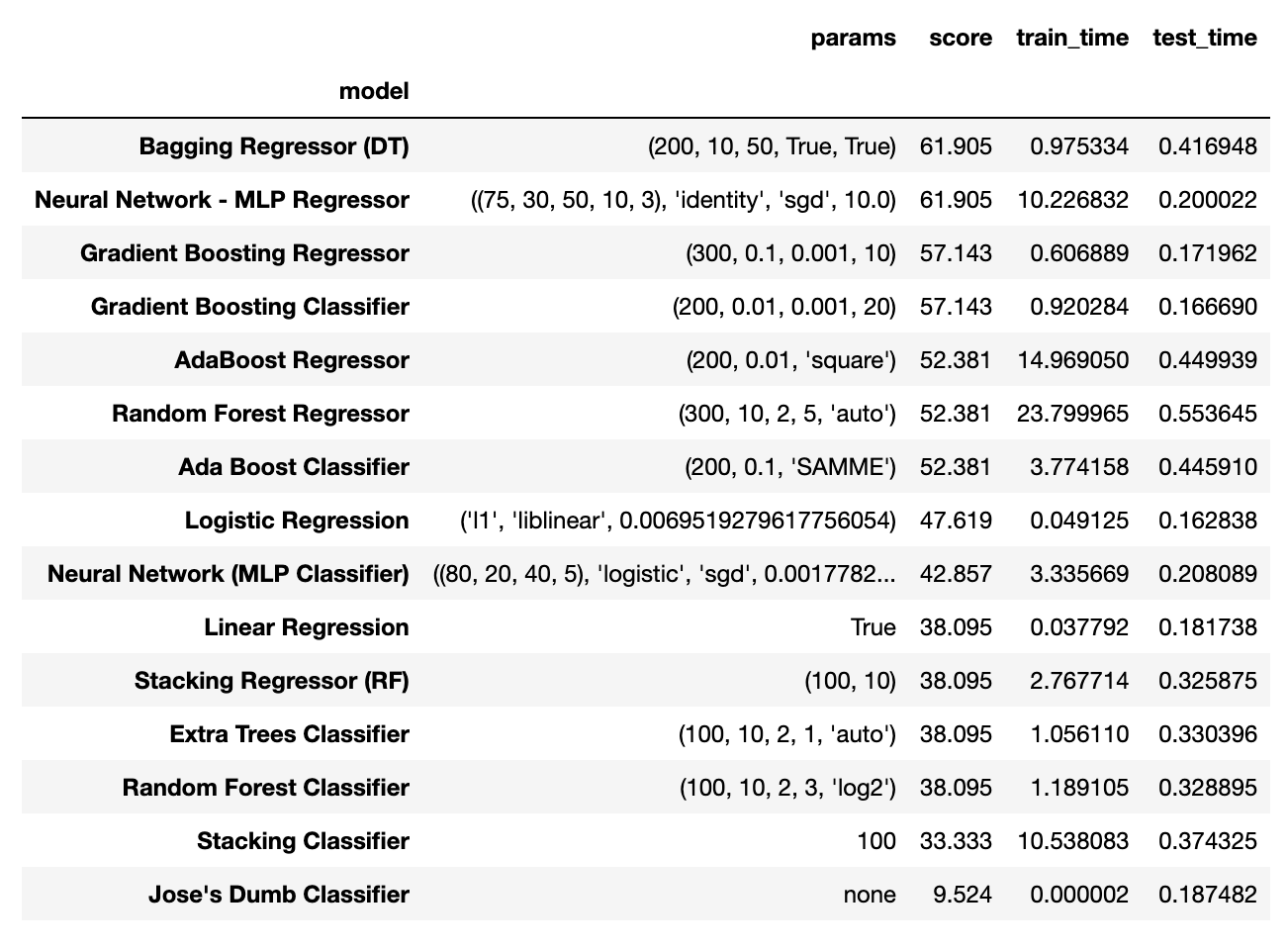

We have a multitude of machine learning algorithms to choose from when building a model. Since this was my first attempt, I wanted to try as many as I could. At this stage I didn’t really know much about any of them, much less how to build one that could make predictions!

In this project we used two types of supervised machine learning techniques. The first is regression models, which predict a continuous number such as the finishing order. The second is classification models, which I used to predict whether a driver wins a race or not.
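The difference between the two framings comes down to the target column. The sketch below derives both targets from the same made-up results, then shows one possible way (an assumption on my part for this example) to turn a regressor's predicted positions into a single winner pick per race. The `predicted_position` values are invented, not real model output.

```python
import pandas as pd

# Made-up results for two races with three drivers each.
races = pd.DataFrame(
    {
        "race_id": [1, 1, 1, 2, 2, 2],
        "driver_id": ["a", "b", "c", "a", "b", "c"],
        "position": [1, 2, 3, 2, 1, 3],
    }
)

# Regression target: the finishing position itself.
y_regression = races["position"]

# Classification target: 1 if the driver won, 0 otherwise.
y_classification = (races["position"] == 1).astype(int)

# Invented regressor outputs, standing in for model.predict(...).
races["predicted_position"] = [1.4, 2.1, 2.9, 1.8, 1.9, 3.2]

# Pick the driver with the lowest predicted position in each race.
winner_rows = races.loc[races.groupby("race_id")["predicted_position"].idxmin()]
predicted_winners = {
    int(race_id): driver
    for race_id, driver in zip(winner_rows["race_id"], winner_rows["driver_id"])
}

print(predicted_winners)
```

Picking one winner per race this way lets a regression model be scored on the same win / no-win basis as a classifier.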

Python makes it relatively easy to build a model. There’s a free, open source library called scikit-learn, where building a model can be as easy as finding an appropriate algorithm, supplying its parameters, and training it with your training data.
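Here is roughly what that workflow looks like in scikit-learn. This is a generic sketch on synthetic data, with LogisticRegression picked arbitrarily as the algorithm; it is not one of my actual project models.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced data: roughly 10% positives, like "win" vs "no win".
X, y = make_classification(
    n_samples=400, n_features=8, weights=[0.9, 0.1], random_state=42
)

# Hold out a test set so the score reflects unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# 1. Pick an algorithm and its parameters.
model = LogisticRegression(max_iter=1000)

# 2. Train it with the training data.
model.fit(X_train, y_train)

# 3. Predict on unseen data and score the result.
predictions = model.predict(X_test)
precision = precision_score(y_test, predictions, zero_division=0)
print(f"precision: {precision:.2f}")
```

Almost every scikit-learn estimator follows the same `fit` / `predict` pattern, which is what makes trying many algorithms practical.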

Finding the best parameters is called hyperparameter tuning in machine learning-speak. There are libraries that do this easily; however, I opted to do it manually using Python for loops. I tried the automated way, but for this project I got better results without it.
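Manual tuning with for loops can be as simple as the sketch below: try every combination, score each one on a validation set, and remember the best. The parameter grid, the model, and the synthetic data are all assumptions for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression data standing in for the real features.
X, y = make_regression(n_samples=300, n_features=6, noise=10.0, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, random_state=0
)

best_params = None
best_mae = float("inf")

# Try every combination of the two hyperparameters.
for max_depth in [2, 4, 8]:
    for min_samples_leaf in [1, 5, 10]:
        model = DecisionTreeRegressor(
            max_depth=max_depth,
            min_samples_leaf=min_samples_leaf,
            random_state=0,
        )
        model.fit(X_train, y_train)
        mae = mean_absolute_error(y_val, model.predict(X_val))

        # Keep the combination with the lowest validation error.
        if mae < best_mae:
            best_mae = mae
            best_params = {
                "max_depth": max_depth,
                "min_samples_leaf": min_samples_leaf,
            }

print(best_params, round(best_mae, 2))
```

scikit-learn's `GridSearchCV` automates the same search with cross-validation; the manual loop trades that convenience for full control over how each candidate is scored.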

I actually combined my hyperparameter tuning with running the actual predictions. How long this takes depends on the algorithms you end up using; my first run took more than 5 hours (I left it running overnight). I found out later that one of the classification models I used (SVM) was very slow to train, yet its results were not much different from those of the other models, which were much quicker. So I ditched it, and all the models I ended up using (15 in total, including the Dummy Classifier) took about 1.7 hours on my trusty old MacBook Pro.
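Measuring training time per model early would have saved me that overnight run. A simple way to do it, sketched here on small synthetic data with an arbitrary set of candidate models, is to wrap each `fit` call in a timer. Timings on a toy data set like this will not resemble the ones from my project.

```python
import time

from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Small synthetic data set; real timings depend heavily on data size.
X, y = make_classification(n_samples=1000, n_features=10, random_state=0)

# Illustrative candidates, including a naive baseline to compare against.
candidates = {
    "dummy_baseline": DummyClassifier(strategy="most_frequent"),
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
    "svm": SVC(),
}

timings = {}
for name, model in candidates.items():
    start = time.perf_counter()
    model.fit(X, y)
    timings[name] = time.perf_counter() - start

# List the models from slowest to fastest to train.
for name in sorted(timings, key=timings.get, reverse=True):
    print(f"{name}: {timings[name]:.4f}s")
```

If one model is far slower than the rest without scoring noticeably better, it is a good candidate to drop before a long tuning run.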

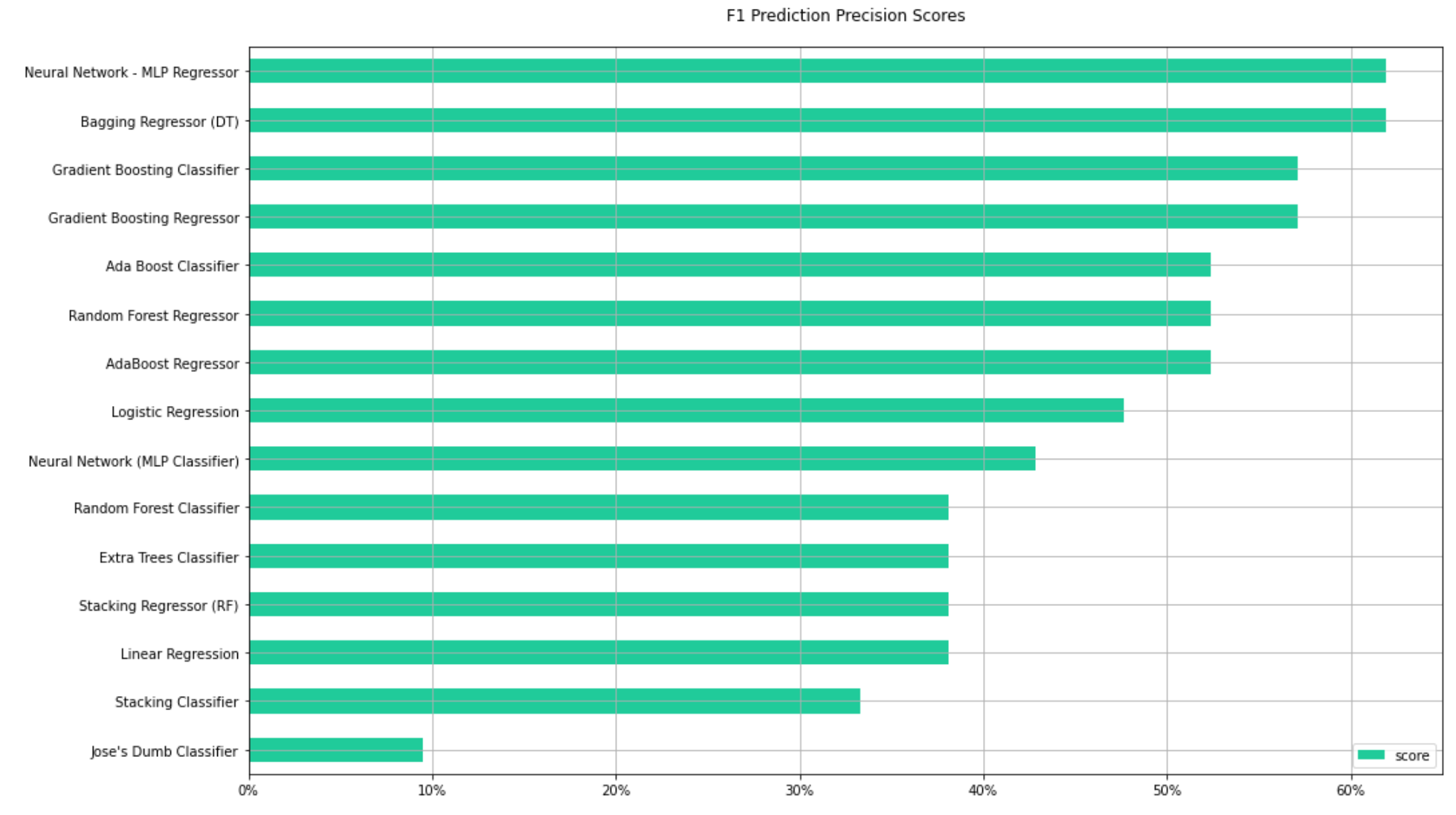

Following our Champion/Challenger methodology, the winning model is the one built with Bagging Regressor (Decision Tree Regressor). This is an ensemble model from scikit-learn that uses DecisionTreeRegressor as its base estimator.
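For reference, a bagging ensemble of decision trees can be set up in scikit-learn as in the sketch below. The data is synthetic and the hyperparameter values are placeholders, not the ones from my winning model. The base estimator is passed positionally because the keyword for it was renamed between scikit-learn versions.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import BaggingRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression data standing in for the engineered features.
X, y = make_regression(n_samples=300, n_features=6, noise=10.0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

# Each of the 25 trees is trained on a bootstrap sample of the training
# data; the ensemble prediction is the average of the trees' predictions.
bagging = BaggingRegressor(
    DecisionTreeRegressor(max_depth=5),
    n_estimators=25,
    random_state=1,
)
bagging.fit(X_train, y_train)

predictions = bagging.predict(X_test)
r2 = bagging.score(X_test, y_test)
print(f"R^2 on held-out data: {r2:.2f}")
```

Averaging many trees trained on different samples reduces the variance of a single decision tree, which is usually why bagging outperforms its base estimator.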

Neural Network - MLP Regressor ended up with the same precision score; however, because it took 10x longer to train, I have relegated it to 2nd place.

Github link to the full Capstone Project
